May 4, 2023

Microsoft Is Helping Finance AMD’s Expansion Into AI Chips

Bloomberg News

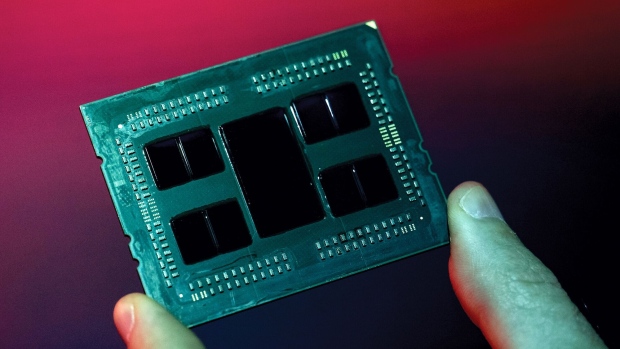

(Bloomberg) -- Microsoft Corp. is working with Advanced Micro Devices Inc. on the chipmaker’s expansion into artificial intelligence processors, according to people with knowledge of the situation, part of a multipronged strategy to secure more of the highly coveted components.

The companies are teaming up to offer an alternative to Nvidia Corp., which dominates the market for AI-capable chips called graphics processing units, said the people, who asked not to be identified because the matter is private. The software giant is providing support to bolster AMD’s efforts, including engineering resources, and working with the chipmaker on a homegrown Microsoft processor for AI workloads, code-named Athena, the people said.

Frank Shaw, a Microsoft spokesman, denied that AMD is part of Athena. “AMD is a great partner,” he said. “However, they are not involved in Athena.”

AMD shares jumped more than 6.5% on Thursday, and Microsoft gained about 1%. AMD representatives declined to comment. Nvidia stock declined 1.9%.

The arrangement is part of a broader rush to augment AI processing power, which is in great demand after the explosion of chatbots like ChatGPT and other services based on the technology. Microsoft is both a top provider of cloud-computing services and a driving force of AI use. The company has pumped $10 billion into ChatGPT maker OpenAI, and has vowed to add such features to its entire software lineup.

The move also reflects Microsoft’s deepening involvement in the chip industry. The company has been building up a silicon division over the past several years under former Intel Corp. executive Rani Borkar, and the group now has a staff of almost 1,000 employees. The Information last month reported on Microsoft’s development of the Athena artificial-intelligence chip.

Several hundred of those employees are working on the Athena project, and Microsoft has spent about $2 billion on its chip efforts, according to one of the people. But the undertaking doesn’t portend a split with Nvidia. Microsoft intends to keep working closely with that company, whose chips are the workhorses for training and running AI systems. It’s also trying to find ways to get more of Nvidia’s processors, underscoring the urgent shortage Microsoft and others are facing.

Microsoft’s relationship with OpenAI, along with its own slate of newly introduced AI services, is requiring computing power at a level beyond what the company expected when it ordered chips and set up data centers. OpenAI’s ChatGPT service has drawn interest from businesses that want to use it as part of their own products or corporate applications, and Microsoft has introduced a chat-based version of Bing and new AI-enhanced Office tools.

It’s also updating older products like GitHub’s code-generating tool. All of those AI programs run in Microsoft’s Azure cloud and require the pricey and powerful processors Nvidia provides.

The area is also a key priority for AMD. “We are very excited about our opportunity in AI — this is our No. 1 strategic priority,” Chief Executive Officer Lisa Su said during the chipmaker’s earnings call Tuesday. “We are in the very early stages of the AI computing era, and the rate of adoption and growth is faster than any other technology in recent history.”

Su also said that AMD has an opportunity to make partly customized chips for its biggest customers to use in their AI data centers.

Borkar’s team at Microsoft, which has also worked on chips for servers and Surface computers, is now prioritizing the Athena project. It’s developing a graphics processing unit that can be used for training and running AI models. The product is already being tested internally and could be more widely available as soon as next year, said one of the people.

Even if the project makes that timeline, a first version is just a starting point, the people said. It takes years to build a good chip, and Nvidia has a substantial head start. Nvidia is the chip supplier of choice for many providers of tools for generative AI, including Amazon.com Inc.’s AWS and Google Cloud, and Elon Musk has secured thousands of its processors for his fledgling AI business, according to reports.

Creating an alternative to Nvidia’s lineup will be a challenging task. That company offers a package of software and hardware that works together — including chips, a programming language, networking equipment and servers — letting customers rapidly upgrade their capabilities.

That’s one of the reasons Nvidia has become so dominant. But Microsoft isn’t alone in trying to develop in-house AI processors. Cloud rival Amazon acquired Annapurna Labs in 2016 and has developed two different AI processors. Alphabet Inc.’s Google also has a training chip of its own.

(Adds Microsoft comment in third paragraph. An earlier version corrected the headline and second paragraph to remove references to financing)

©2023 Bloomberg L.P.