Aug 16, 2021

Tesla Autopilot probed by U.S. over crash-scene collisions

Bloomberg News

The U.S. opened a formal investigation into Tesla Inc.’s Autopilot system after almost a dozen collisions at crash scenes involving first-responder vehicles, stepping up its scrutiny of a system the carmaker has charged thousands of dollars for over the last half decade.

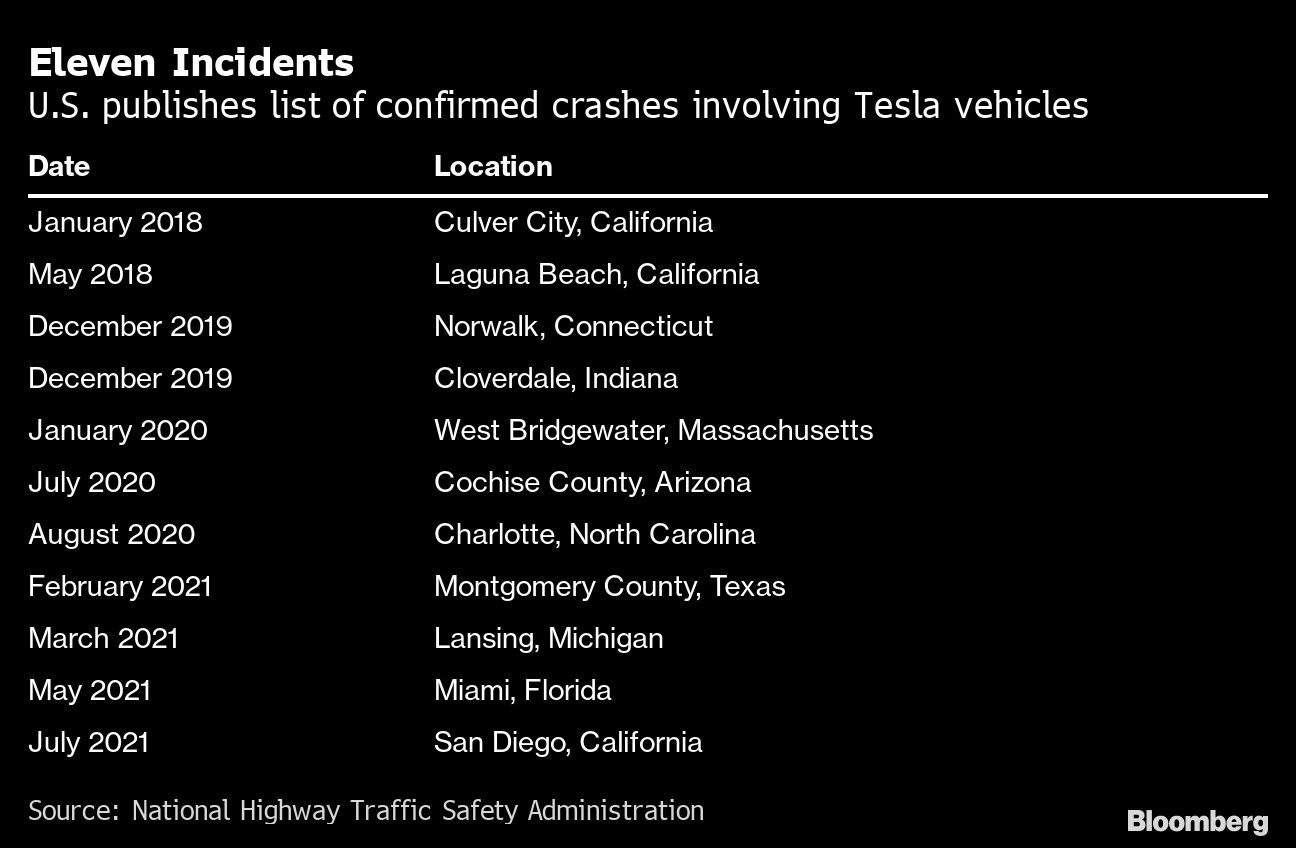

The probe by the National Highway Traffic Safety Administration covers an estimated 765,000 Tesla Model Y, X, S and 3 vehicles from the 2014 model year onward. The regulator -- which has the power to deem cars defective and order recalls -- said it launched the investigation after 11 crashes that resulted in 17 injuries and one fatality.

“Most incidents took place after dark and the crash scenes encountered included scene-control measures such as first-responder vehicle lights, flares, an illuminated arrow board and road cones,” the agency said in the document. “The involved subject vehicles were all confirmed to have been engaged in either Autopilot or Traffic Aware Cruise Control during the approach to the crashes.”

Tesla shares fell as much as 5.7 per cent before settling down 4.9 per cent to US$682.04 at 12:51 p.m. in New York. Representatives for the electric-car maker didn’t immediately respond to a request for comment.

Autopilot is Tesla’s driver-assistance system that maintains vehicles’ speed and keeps them centered in lanes when engaged, though the driver is supposed to supervise at all times. The company has been criticized for years for naming the system in a potentially misleading way. Since late 2016, it has marketed higher-level functionality called Full Self-Driving Capability. It now sells that package of features -- often referred to as FSD -- for US$10,000 or US$199 a month.

A NHTSA spokesperson said the agency’s preliminary investigation will focus on Tesla’s Autopilot system and “the technologies and methods used to monitor, assist, and enforce the driver’s engagement with driving while Autopilot is in use.” The agency is looking at accidents in which one of two Tesla systems -- Autopilot or Traffic Aware Cruise Control -- was engaged.

Auto safety advocates applauded the move.

“We are glad to see NHTSA finally acknowledge our long standing call to investigate Tesla for putting technology on the road that will be foreseeably misused in a way that is leading to crashes, injuries and deaths,” said Jason Levine, the executive director of the Center for Auto Safety. “This probe needs to go far beyond crashes involving first responder vehicles because the danger is to all drivers, passengers and pedestrians when Autopilot is engaged.”

NHTSA investigated Tesla’s Autopilot in the wake of a 2016 fatal crash and cleared the system early the following year. The regulator has opened at least 30 special crash investigations involving Tesla cars that it suspected were linked to Autopilot, with the pace of probes picking up under the Biden administration.

The first of the 11 crashes that prompted the latest probe occurred in January 2018 in Culver City, California, according to NHTSA. The most recent incident occurred July 10 in San Diego. Others occurred in Florida, Michigan, Texas, Arizona, Massachusetts, Indiana and Connecticut.

NHTSA announced in June that it would order car manufacturers to report crashes involving automated-driving technology within one day of learning of such incidents. The agency had largely taken a hands-off approach to regulating driver-assistance systems up to that point so as not to stand in the way of their potential safety benefits.

Jake Fisher, director of auto testing at Consumer Reports, said Autopilot and other hands-free driving systems now offered on cars need technology trained on drivers to make sure they are watching the road when using the feature.

“NHTSA, in no uncertain terms, needs to understand what’s going on and do something to help prevent these types of crashes, not just in Teslas, but in other vehicles that use this technology,” Fisher said in an interview. “A more robust way of assuring that the driver is looking at the road is the only way we’re going to keep people safe.”

Fisher pointed to General Motors Co.’s Super Cruise, which uses cameras to monitor the driver’s gaze and make sure they are watching the road while using the technology. For years, Tesla only attempted to monitor whether there was a hand on the wheel and did not have cameras monitoring the driver.

In April, Consumer Reports “easily tricked” Tesla’s Autopilot system into operating while no one was sitting in the driver’s seat. Fisher also has long been critical of the name of Tesla’s system.

“For a lot of people, Autopilot means that the car is self-driving,” Fisher said. “But regardless of what they call it, the experience behind the wheel leads to over-reliance.”