Mar 18, 2024

Nvidia Unveils Successor to Its All-Conquering AI Processor

Bloomberg News

(Bloomberg) -- Nvidia Corp. Chief Executive Officer Jensen Huang showed off new chips aimed at extending his company’s dominance of artificial intelligence computing, a position that’s already made it the world’s third-most-valuable business.

A new processor design called Blackwell is multiple times faster at handling the models that underpin AI, the company said at its GTC conference on Monday in San Jose, California. That includes the process of developing the technology, a stage known as training, and the running of it, which is called inference.

The Blackwell chips, which are made up of 208 billion transistors, will be the basis of new computers and other products being deployed by the world’s largest data center operators — a roster that includes Amazon.com Inc., Microsoft Corp., Alphabet Inc.’s Google and Oracle Corp. Blackwell-based products will be available later this year, Nvidia said.

The design is named after David Blackwell, the first Black scholar inducted into the National Academy of Sciences, and it has a tough act to follow. Its predecessor, Hopper, fueled explosive sales at Nvidia by building up the field of AI accelerator chips. The flagship product from that lineup, the H100, has become one of the most prized commodities in the tech world, fetching tens of thousands of dollars per chip.

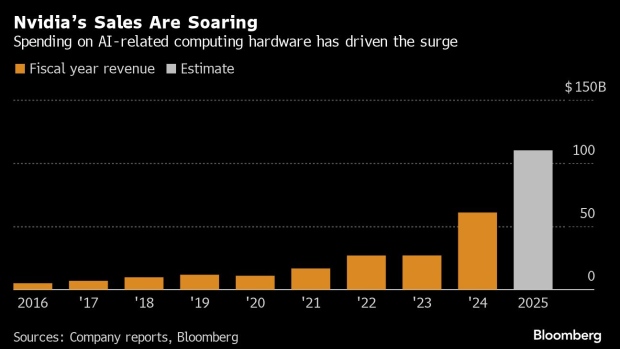

The growth has sent Nvidia’s valuation soaring as well. It is the first chipmaker to have a market capitalization of more than $2 trillion and trails only Microsoft and Apple Inc. overall.

The announcement of new chips was widely anticipated, and Nvidia’s stock was up 79% this year through Monday’s close. That made it hard for the presentation’s details to impress investors, who sent the shares down as much as 3.9% in New York on Tuesday.

Huang, Nvidia’s co-founder, said AI is the driving force in a fundamental change in the economy and that Blackwell chips are “the engine to power this new industrial revolution.”

“Working with the most dynamic companies in the world, we will realize the promise of AI for every industry,” he said at Monday’s conference, the company’s first in-person event since the pandemic.

Nvidia didn’t discuss pricing of the new processors, but chips in the Hopper line have gone for an estimated $30,000 to $40,000 — with some resellers offering them for multiple times that amount. Huang told CNBC on Tuesday that Blackwell chips will be in a similar range.

The new design has so many transistors — the tiny switches that give semiconductors their ability to store and process information — that it’s too big for conventional production techniques. It’s actually two chips married to each other through a connection that ensures they act seamlessly as one, the company said. Nvidia’s manufacturing partner, Taiwan Semiconductor Manufacturing Co., will use its 4NP technique to produce the product.

Blackwell will also have an improved ability to link with other chips and a new way of crunching AI-related data that speeds up the process. It’s part of the next version of the company’s “superchip” lineup, meaning it’s combined with Nvidia’s central processing unit called Grace. Users will have the choice to pair those products with new networking chips — one that uses a proprietary InfiniBand standard and another that relies on the more common Ethernet protocol. Nvidia is also updating its HGX server machines with the new chip.

The Santa Clara, California-based company got its start selling graphics cards that became popular among computer gamers. Nvidia’s graphics processing units, or GPUs, ultimately proved successful in other areas because of their ability to divide up calculations into many simpler tasks and handle them in parallel. That technology is now graduating to more complex, multistage tasks, based on ever-growing sets of data.

Blackwell will help drive the transition beyond relatively simple AI jobs, such as recognizing speech or creating images, the company said. That might mean generating a three-dimensional video by simply speaking to a computer, relying on models that have as many as 1 trillion parameters.

For all its success, Nvidia’s revenue has become highly dependent on a handful of cloud computing giants: Amazon, Microsoft, Google and Meta Platforms Inc. Those companies are pouring cash into data centers, aiming to outdo their rivals with new AI-related services.

The challenge for Nvidia is broadening its technology to more customers. Huang aims to accomplish this by making it easier for corporations and governments to implement AI systems with their own software, hardware and services.

Huang’s speech kicks off a four-day GTC event that’s been called a “Woodstock” for AI developers. Here are some of the highlights from the presentation:

- Nvidia’s Omniverse software and services, which allow users to create digital twins of real-world items, are coming to Apple’s Vision Pro headset. Nvidia data centers will send images and video to the device, providing users with a more lifelike experience.

- Siemens AG has integrated Omniverse into its Xcelerator industrial design software. Shipbuilder HD Hyundai will use the technology to save time and money during construction by building complete vessels in the virtual world first.

- Chinese electric vehicle maker BYD Co. is switching to Nvidia chips, software and services throughout its operations. This includes using Nvidia for the electronic brains of cars, vehicle design and the robots in its factories. Omniverse also will be used to help car buyers to configure their vehicles.

- Project Groot, consisting of a new computer based on Blackwell, is being made available for makers of humanoid robots. It will allow the robots to understand natural language and copy human movements by observing them. Developing such skills is “one of the most exciting problems to solve in AI today,” Huang said.

- Johnson & Johnson is using Nvidia technology to speed up the development of AI-related software that will use advanced analytics in surgery.

Huang concluded the event by having two robots join him on stage, saying they were trained with Nvidia’s simulation tools.

“Everything that moves in the future will be robotic,” he said.

©2024 Bloomberg L.P.