Oct 30, 2023

Biden signs sweeping executive order regulating artificial intelligence

Bloomberg News

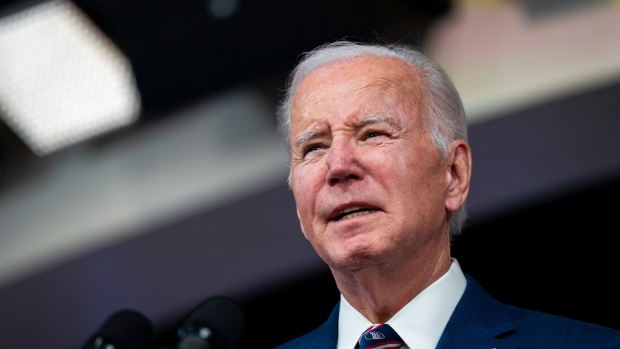

US President Joe Biden signed an executive order on artificial intelligence that establishes standards for security and privacy protections and requires developers to safety-test new models, casting it as necessary regulation for the emerging technology.

“To realize the promise of AI and avoid the risk, we need to govern this technology,” Biden said at a White House event Monday, detailing his most significant action yet on a technology whose practical applications and public use have skyrocketed in recent months.

The order will have broad impacts on companies developing powerful AI tools that could threaten national security. Leading developers such as Microsoft Corp., Amazon.com Inc. and Alphabet Inc.'s Google will need to submit test results on their new models to the government before releasing them to the public.

Biden said the Commerce Department will also develop standards for watermarking AI-generated content, such as audio or images, often referred to as “deepfakes.”

“That way you can tell whether it’s real or it’s not,” Biden said, noting that “AI devices are being used to deceive people.”

Biden said he had watched deepfakes of himself speaking and marvelled at how realistic the images appeared, often asking himself, “When the hell did I say that?”

The order aims to leverage the US government's position as a top customer for big tech companies to vet technology that carries potential national or economic security risks or health and safety impacts. Bloomberg Government earlier reported on a draft of the order.

The order will marshal federal agencies across government. Biden said he will direct the Department of Energy to ensure AI systems don’t pose chemical, biological or nuclear risks and the Departments of Defense and Homeland Security to develop cyber protections to make computers and critical infrastructure safer.

Congressional action

Biden said he would also meet Tuesday at the White House with Senate Majority Leader Chuck Schumer and lawmakers from both parties to discuss artificial intelligence and passing privacy legislation. Biden has repeatedly pushed lawmakers to approve privacy protections.

Schumer has called for the US to spend at least $32 billion to boost AI research and development and said Monday that he hoped to have legislation setting standards for the technology ready in the coming months.

Lawmakers have been holding briefings and meeting with tech representatives, including Meta Platforms Inc.’s Mark Zuckerberg and OpenAI’s Sam Altman, to better understand the technology before drafting legislation. Venture capitalists Marc Andreessen and John Doerr have also participated in the closed-door sessions.

Monday's action builds on voluntary commitments to deploy AI securely, which more than a dozen companies adopted over the summer at the White House's request, and on the White House's blueprint for an "AI Bill of Rights," a guide for safe development and use.

“This executive order sends a critical message: that AI used by the United States government will be responsible AI,” IBM Corp. Chairman and Chief Executive Officer Arvind Krishna said in a statement. IBM Vice Chairman Gary Cohn, former President Donald Trump’s National Economic Council director, attended Monday’s event.

Microsoft views the order as “another critical step forward in the governance of AI technology,” Vice Chairman and President Brad Smith said in a statement.

Biden’s directive precedes a trip by Vice President Kamala Harris and industry leaders to a UK-hosted summit about AI risks, giving Harris a US plan to present on the world stage.

“Technology with global impact requires global action,” Harris said Monday. “We will work with our allies and our partners to apply existing international rules and norms with a purpose to promote global order and stability.”

The US set aside US$1.6 billion in fiscal 2023 for AI, a number that's expected to increase as the military releases more detail about its spending, according to Bloomberg Government data.

Algorithmic bias

Biden has repeatedly called for guidance to protect Americans from algorithmic bias in housing and government benefits programs, as well as in decisions made by federal contractors.

The Justice Department warned in a January filing that companies that sell algorithms to screen potential tenants are liable under the Fair Housing Act if they discriminate against Black applicants. Biden directed the department to establish best practices for investigating and prosecuting such civil-rights violations related to AI, including in the criminal justice system.

The order also asks immigration officials to ease visa requirements for overseas talent seeking to work at American AI companies.

With assistance from Jordan Fabian, Amanda Allen and Jenny Leonard.