Apr 11, 2023

Amazon’s Twitch Safety, AI Ethics Job Cuts Raise Concerns Among Ex-Workers

Bloomberg News

(Bloomberg) -- Job cuts at Amazon.com Inc.’s Twitch division are raising concerns among former employees and content monitors about the popular livestreaming site’s ability to police abusive or illegal behavior — issues that have plagued the business since its inception.

Layoffs at Twitch eliminated about 15% of the staff responsible for monitoring such behavior, according to former employees with knowledge of the matter. The company also folded a new team monitoring the ethics of its AI efforts, said the people, who asked not to be identified to protect their job prospects.

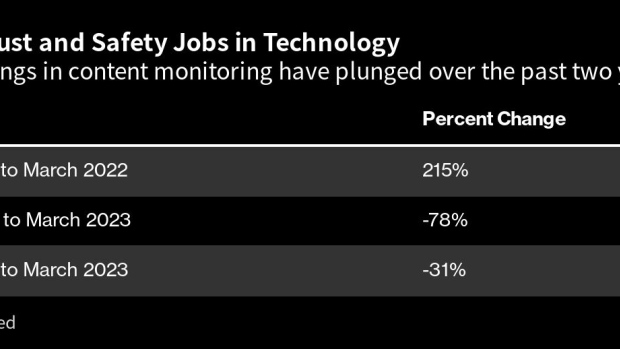

Since late 2022, technology companies have cut more than 200,000 jobs. Meta Platforms Inc., Alphabet Inc. and Twitter are among those that eliminated trust and safety positions and contractors. Job postings containing the words “trust and safety” declined 78% in March 2023 from a year earlier, according to the job-listing site Indeed. Technology companies also thinned their responsible AI and diversity teams.

“Laying off the trust and safety team can severely hinder efforts to reduce online hate and abuse — especially when it comes to radicalization and child safety” and could “open Twitch up to legal liability,” said Kat Lo, content moderation lead for Meedan, a nonprofit software company. “Trust and safety is also responsible for auditing new features to minimize the potential for abuse.”

In interviews with Bloomberg News, four former Twitch employees with knowledge of trust and safety or AI operations questioned whether executives at the company or Amazon are appropriately prioritizing moderation and building AI tools conscientiously.

“We were seen more as a cost center, not a revenue generator,” one former employee said.

With the job cuts, the company lost significant institutional knowledge in those teams, one person said. The cuts fell especially hard on the people in charge of handling appeals from streamers who had been banned or suspended, another person said. Some of that work will be outsourced, the people said.

Executives at Twitch and Amazon are increasingly focused on reducing losses at the livestreaming business. Besides cutting jobs, they have also revamped creator pay. Amazon purchased Twitch for $970 million in 2014. Since then, viewership has risen more than sixfold, to 2.4 million people watching at any given time.

Over that stretch, the costs associated with operating the service have also ballooned. Although Twitch receives a discounted rate on hosting services from Amazon, running an international livestreaming service is enormously expensive, executives have told Bloomberg.

“User and revenue growth have not kept pace with our expectations,” new Chief Executive Officer Dan Clancy wrote in a March blog post announcing job cuts at the company.

The company declined to comment on the record.

With Twitch airing 2.5 million hours of live user-generated content daily and no one monitoring videos before they air, the company requires sophisticated policing tools. Several terrorist attacks were livestreamed on the platform, including the 2022 supermarket shooting in Buffalo, New York. Twitch has also struggled to protect children and prevent grooming on the platform, a Bloomberg investigation found.

Senior Twitch officials met last month with representatives of the UK regulator Ofcom to discuss the company’s plans to combat child grooming on the platform. Ofcom is reviewing whether Twitch’s moderation capabilities are robust enough to prevent illegal or harmful material, an agency spokesperson said.

“We will be closely monitoring the efficacy of these measures as they are rolled out,” the Ofcom spokesperson said.

For years, Twitch allowed the toxicity associated with gaming culture to run rampant. As the platform became more mainstream, the company invested in moderation tools, including a user-activated feature called AutoMod. Management also hired more full-time trust and safety professionals to evaluate users’ reports and remove content that violated terms of service or the law.

Co-founder and former CEO Emmett Shear, who stepped down days before the March layoff announcement, championed trust and safety efforts, the people said.

In addition to streamlining and combining some units, Twitch will increase its reliance on outside suppliers to monitor content and police user activity. One such contractor is Majorel Group Luxembourg SA, a European technology consultant.

In 2022, Insider published a report alleging that workers at Majorel endured surveillance and overwork while reviewing gruesome content on behalf of TikTok.

In a statement to Bloomberg, Karsten König, an executive vice president at Majorel, said that the health and well-being of the company’s content moderators is a priority.

“We demonstrate this every day by providing 24/7 professional psychological support, together with a comprehensive suite of health and well-being initiatives that receive high praise from our people,” he said.

As part of its recent cuts, Twitch laid off the head of its law-enforcement response team, Jessica Marasa. Marasa was widely respected at Twitch for instituting innovative policies such as the off-service conduct policy, which allows the company to remove the accounts of users who commit serious offenses elsewhere on the internet or in real life.

Marasa also led safety efforts at in-person Twitch events, including the TwitchCon conference, which attracted 30,000 attendees in San Diego last year.

“The public and politicians are ramping up scrutiny over social-media platforms’ ability to moderate disturbing, hateful and illegal material,” said Lo, the Meedan moderation lead.

The firings have left some functions and initiatives intended to tackle Twitch’s unique safety challenges up in the air, according to the people. The company had been developing plans to help rehabilitate banned creators and educate them on responsible behavior and copyright protocols. It is also working to improve child safety on the platform through new products, the people said.

©2023 Bloomberg L.P.